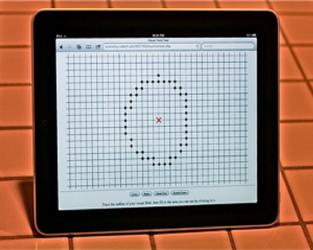

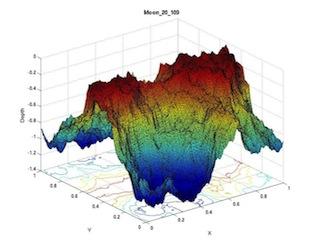

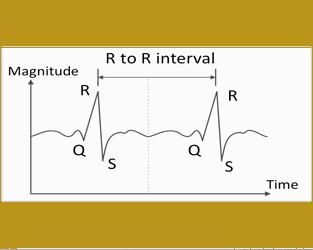

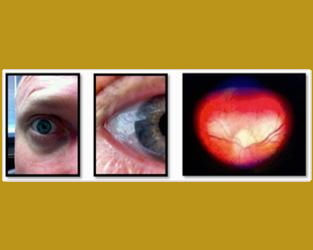

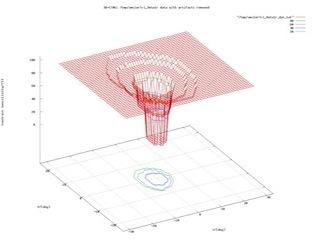

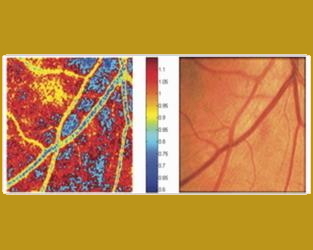

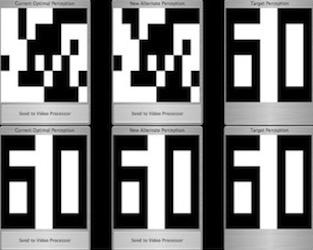

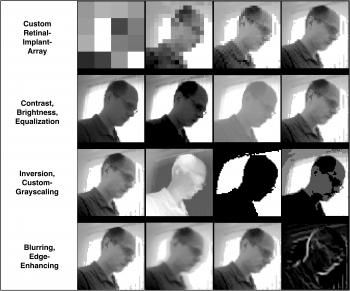

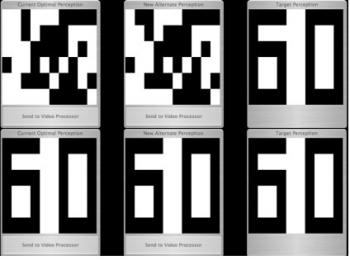

The human retina is not a mere receptor array for photonic information. It performs significant image processing within its layered neural network structure. Current state-of-the-art and near-future artificial vision implants provide tens of electrodes, allowing only limited-resolution visual perception (pixelation). Real-time image processing and enhancement can improve the limited vision afforded by camera-driven implants, such as the Artificial Retina, ultimately benefiting the subject. Preserving and enhancing contrast differences and transitions, such as edges, is especially important, more so than preserving picture details such as object texture.

The Artificial Vision Support System (AVS2; Fink and Tarbell, 2005), devised and implemented under the direction of Dr. Wolfgang Fink at the Visual and Autonomous Exploration Systems Research Laboratory at Caltech, performs real-time image processing and enhancement of the miniature camera image stream before it is fed into the Artificial Retina. This research was part of the collaborative NSF-funded BMES ERC and U.S. Department of Energy-funded Artificial Retina Project designed to restore sight to the blind.

Since it is difficult to predict exactly what blind subjects with camera-driven visual prostheses, such as the Artificial Retina, may be able to perceive, AVS2 lets current and future retinal implant carriers choose from a wide variety of image processing filters to optimize the visual perception their individual prostheses provide. AVS2 interfaces with a wide variety of digital cameras and is thus directly and immediately applicable to artificial vision prostheses that use an external or internal video-camera system as the first step in the vision stimulation/processing cascade. AVS2 presents the captured camera video stream in a user-defined pixelation that matches, e.g., the dimensions of the implanted electrode array of the Artificial Retina. It subsequently processes the video data through user-selected image filters and then issues the result to the Artificial Retina.
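The pixelation and filtering steps described above can be illustrated with a minimal Python sketch. It is not the AVS2 implementation: the function names (`pixelate`, `stretch_contrast`, `edge_magnitude`, `process_frame`), the choice of block averaging for pixelation, the two example filters, and the order of operations (pixelate first, then filter) are all assumptions made for illustration. A frame is taken to be a 2-D grayscale NumPy array.

```python
import numpy as np

def pixelate(frame, rows, cols):
    """Reduce a 2-D grayscale frame to rows x cols by averaging blocks.

    Hypothetical helper: rows x cols stands in for the electrode array size.
    """
    frame = np.asarray(frame, dtype=float)
    h, w = frame.shape
    if rows > h or cols > w:
        raise ValueError("target grid is larger than the frame")
    # Block boundaries; linspace copes with sizes that do not divide evenly.
    r_edges = np.linspace(0, h, rows + 1).astype(int)
    c_edges = np.linspace(0, w, cols + 1).astype(int)
    out = np.empty((rows, cols))
    for i in range(rows):
        for j in range(cols):
            block = frame[r_edges[i]:r_edges[i + 1], c_edges[j]:c_edges[j + 1]]
            out[i, j] = block.mean()
    return out

def stretch_contrast(img):
    """Rescale intensities linearly to the range [0, 1]."""
    lo, hi = img.min(), img.max()
    if hi == lo:
        return np.zeros_like(img)  # flat image: no contrast to stretch
    return (img - lo) / (hi - lo)

def edge_magnitude(img):
    """Gradient magnitude as a simple stand-in for an edge-enhancing filter."""
    gy, gx = np.gradient(img)
    return np.hypot(gx, gy)

# Illustrative filter registry; a user would select filters by name.
FILTERS = {"contrast": stretch_contrast, "edges": edge_magnitude}

def process_frame(frame, rows, cols, filter_names):
    """Pixelate a frame, then apply the selected filters in order."""
    out = pixelate(frame, rows, cols)
    for name in filter_names:
        out = FILTERS[name](out)
    return out

if __name__ == "__main__":
    # Synthetic 8x8 frame: dark left half, bright right half.
    frame = np.zeros((8, 8))
    frame[:, 4:] = 255.0
    print(process_frame(frame, 4, 4, ["contrast", "edges"]))
```

Keeping the filters in a name-to-function table mirrors the idea of letting the user pick and combine filters, and with a grid of only tens of cells the explicit loops in `pixelate` cost little.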

Sponsor(s):