Patents

U.S. 12,373,682: Sensor quality upgrade framework

Various examples related to upgrading low quality sensor output data in real time to provide high quality data suitable for, e.g., medical monitoring and diagnosis, or other non-medical analysis applications are presented. In one example, among others, a method includes training an artificial neural network (ANN) or learning logic based upon concurrent sensor output data from sensors to provide higher-quality sensor data from one of the sensors; obtaining subsequent sensor output data from the one sensor; and generating subsequent higher-quality sensor output data by applying the trained ANN or learning logic to the subsequent sensor output data. In another example, a device includes a sensor that generates higher-quality sensor output data using a trained ANN or learning logic. In another example, a system includes a mobile user device that receives low-quality sensor output data from a sensor and generates higher-quality sensor output data using a trained ANN or learning logic.
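The train-then-apply flow in this abstract can be pictured with a deliberately simple stand-in. The sketch below assumes two time-aligned recordings of the same quantity, one from a low-quality sensor and one from a higher-quality reference, and swaps in an ordinary least-squares line for the ANN or learning logic. `train_upgrade_model` and `upgrade` are hypothetical names, and the patent does not describe this model.

```python
# Sketch only: an ordinary least-squares line stands in for the trained
# ANN or learning logic; the function names are hypothetical.
from statistics import fmean


def train_upgrade_model(low: list[float], high: list[float]) -> tuple[float, float]:
    """Fit high ~= slope * low + intercept on concurrent sample pairs."""
    if len(low) != len(high) or len(low) < 2:
        raise ValueError("need at least two concurrent sample pairs")
    mean_low, mean_high = fmean(low), fmean(high)
    var_low = sum((x - mean_low) ** 2 for x in low)
    if var_low == 0:
        raise ValueError("low-quality samples have zero variance")
    cov = sum((x - mean_low) * (y - mean_high) for x, y in zip(low, high))
    slope = cov / var_low
    return slope, mean_high - slope * mean_low


def upgrade(model: tuple[float, float], subsequent_low: list[float]) -> list[float]:
    """Apply the trained mapping to later readings from the low-quality sensor."""
    slope, intercept = model
    return [slope * x + intercept for x in subsequent_low]


if __name__ == "__main__":
    # Concurrent recordings: the cheap sensor reads 2x the true value plus 0.5.
    low = [2.5, 4.5, 6.5, 8.5]
    high = [1.0, 2.0, 3.0, 4.0]
    model = train_upgrade_model(low, high)
    print(upgrade(model, [10.5, 12.5]))  # [5.0, 6.0]
```

A practical system would likely need a nonlinear model and multi-sample input windows; the linear fit only marks where training on concurrent data ends and real-time application to the single sensor begins.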

U.S. 12,065,237: Flight duration enhancement for single rotorcraft and multicopters

Various examples are provided related to flight duration enhancement for rotorcraft and multicopters. In one example, a rotorcraft or multicopter includes one or more rotors, and one or more nozzles positioned in relationship to at least one corresponding rotor. The one or more nozzles can modulate, reshape, redirect, or adjust downwash produced by the corresponding rotor. The one or more nozzles can dynamically modulate, reshape, redirect, or adjust the downwash below the rotorcraft or multicopter. The one or more nozzles can be morphed or reshaped to dynamically modulate, reshape, redirect, or adjust the downwash using, e.g., a stochastic optimization framework and/or a motif-based auto-controller.

U.S. 11,960,815: Automated network-on-chip design

Various examples are provided related to automated chip design, such as a Pareto-optimization framework for automated network-on-chip design. In one example, a method for network-on-chip (NoC) design includes determining network performance for a defined NoC configuration comprising a plurality of n routers interconnected through a plurality of intermediate links; comparing the network performance of the defined NoC configuration to at least one performance objective; and determining, in response to the comparison, a revised NoC configuration based upon iterative optimization of the at least one performance objective through adjustment of link allocation between the plurality of n routers. In another example, a method comprises determining a revised NoC configuration based upon iterative optimization of at least one performance objective through adjustment of a first number of routers to obtain a second number of routers and through adjustment of link allocation between the second number of routers.
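As a rough illustration of iterating on link allocation against a performance objective, the sketch below scores a NoC configuration of n routers by its average router-to-router hop count (an assumed latency proxy) and repeatedly moves one link, keeping the move only when the score improves and the network stays connected. It is a single-objective hill-climb rather than the Pareto-optimization framework named in the abstract, and `avg_hops` and `optimize_links` are hypothetical names.

```python
# Sketch only: average hop count is an assumed performance objective, and a
# single-objective hill-climb replaces the patent's Pareto optimization.
import random
from collections import deque
from itertools import combinations

Link = tuple[int, int]  # (lower router id, higher router id)


def avg_hops(n: int, links: set[Link]) -> float:
    """Mean shortest-path hop count over all ordered router pairs (inf if disconnected)."""
    adj: dict[int, list[int]] = {r: [] for r in range(n)}
    for a, b in links:
        adj[a].append(b)
        adj[b].append(a)
    total = 0
    for src in range(n):
        dist = {src: 0}
        queue = deque([src])
        while queue:  # breadth-first search from src
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        if len(dist) < n:
            return float("inf")
        total += sum(dist.values())
    return total / (n * (n - 1))


def optimize_links(n: int, links: set[Link], iterations: int = 500, seed: int = 0):
    """Move one link per iteration; keep the move only if the objective improves."""
    rng = random.Random(seed)
    best = set(links)
    best_score = avg_hops(n, best)
    all_pairs = list(combinations(range(n), 2))
    for _ in range(iterations):
        unused = [p for p in all_pairs if p not in best]
        if not best or not unused:
            break
        # Remove one existing link and add one unused link (link count unchanged).
        trial = (best - {rng.choice(sorted(best))}) | {rng.choice(unused)}
        score = avg_hops(n, trial)
        if score < best_score:
            best, best_score = trial, score
    return best, best_score


if __name__ == "__main__":
    ring = {tuple(sorted((i, (i + 1) % 8))) for i in range(8)}
    links, score = optimize_links(8, ring)
    print(f"ring: {avg_hops(8, ring):.3f} hops -> optimized: {score:.3f} hops")
```

Moving toward the abstract's second example would mean also letting the optimizer change the number of routers, and a Pareto version would keep a set of non-dominated configurations across several objectives (for instance latency and link count) instead of a single score.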

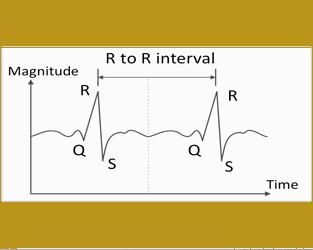

U.S. 11,766,182: Systems and methods for real-time signal processing and fitting

Various examples of methods and systems are provided for real-time signal processing. In one example, a method for processing data to select a pattern includes receiving data via a sensor, evaluating the data including waveforms over a time domain, averaging the waveforms to obtain a mean waveform, selecting a pattern based on the mean waveform, and generating a notification regarding the selected pattern. The pattern can include a start time, a hold time, and an end time. In another example, a system includes one or more sensors that detect the data and a mobile platform that evaluates the data, averages the waveforms to obtain the mean waveform and selects a pattern based on the mean waveform. A user interface can be used to communicate the notification regarding the selected pattern. The patterns can include breathing patterns, which can be used to reduce stress in a subject being monitored by the sensor.

U.S. 11,737,667: Nanowired ultra-capacitor based power sources for implantable sensors and devices

Various examples related to power sources for implantable sensors and/or devices are provided. In one example, a device for implantation in a subject includes circuitry for sensing an observable parameter of the subject and a power source comprising a nanowired ultra-capacitor (NUC), the power source having a volume of 10 mm³ or less. The NUC can have a surface capacitance density in a range from about 25 mF/cm² to about 29 mF/cm² or greater. Such devices can be used for, e.g., ocular diagnostic sensors or other implantable sensors that may be constrained by size limitations.
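For U.S. 11,766,182, the averaging and pattern-selection steps could look roughly like the sketch below. It assumes the sensor data have already been segmented into equal-length, time-aligned breathing waveforms, and it describes each candidate pattern by a start, hold and end time as the abstract does. The triangular template shape, the least-squares selection rule, the candidate values and all names are illustrative assumptions.

```python
# Sketch only: the triangular template, candidate values and names are
# illustrative assumptions, not taken from the patent.
from dataclasses import dataclass


@dataclass(frozen=True)
class BreathingPattern:
    name: str
    start: float  # inhalation begins (s)
    hold: float   # peak reached (s)
    end: float    # exhalation finished (s)

    def __post_init__(self) -> None:
        if not self.start < self.hold < self.end:
            raise ValueError("expected start < hold < end")

    def value(self, t: float) -> float:
        """Assumed template: linear rise start->hold, linear fall hold->end."""
        if t <= self.start or t >= self.end:
            return 0.0
        if t <= self.hold:
            return (t - self.start) / (self.hold - self.start)
        return (self.end - t) / (self.end - self.hold)


def mean_waveform(waveforms: list[list[float]]) -> list[float]:
    """Sample-by-sample average of equal-length, time-aligned waveforms."""
    if not waveforms or len({len(w) for w in waveforms}) != 1:
        raise ValueError("need one or more waveforms of equal length")
    return [sum(samples) / len(waveforms) for samples in zip(*waveforms)]


def select_pattern(mean: list[float], patterns: list[BreathingPattern], dt: float) -> BreathingPattern:
    """Pick the candidate with the smallest squared error against the mean waveform."""
    def error(p: BreathingPattern) -> float:
        return sum((m - p.value(i * dt)) ** 2 for i, m in enumerate(mean))
    return min(patterns, key=error)


def notification(pattern: BreathingPattern) -> str:
    """Text a user interface could display for the selected pattern."""
    return (f"Suggested breathing pattern '{pattern.name}': start {pattern.start} s, "
            f"hold {pattern.hold} s, end {pattern.end} s")


if __name__ == "__main__":
    recorded = [
        [0.0, 0.05, 0.38, 0.70, 1.05, 0.80, 0.50, 0.20, 0.0],
        [0.0, -0.05, 0.30, 0.62, 0.95, 0.70, 0.50, 0.30, 0.0],
    ]
    candidates = [BreathingPattern("slow", 1.0, 4.0, 8.0),
                  BreathingPattern("fast", 0.0, 2.0, 4.0)]
    best = select_pattern(mean_waveform(recorded), candidates, dt=1.0)
    print(notification(best))
```

Averaging first suppresses breath-to-breath noise, so the pattern choice is made against one representative waveform rather than each noisy cycle.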

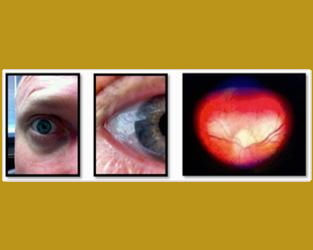

U.S. 11,684,256: Eye movement in response to visual stimuli for assessment of ophthalmic and neurological conditions

The present invention generally relates to apparatus, software and methods for assessing ocular, ophthalmic, neurological, physiological, psychological and/or behavioral conditions. As disclosed herein, the conditions are assessed using eye-tracking technology that beneficially eliminates the need for a subject to fixate and maintain focus during testing or to produce a secondary (non-optical) physical movement or audible response, i.e., feedback. The subject is only required to look at a series of individual visual stimuli, which is generally an involuntary reaction. The reduced need for cognitive and/or physical involvement of a subject allows the present modalities to achieve greater accuracy, due to reduced human error, and to be used with a wide variety of subjects, including small children, patients with physical disabilities or injuries, patients with diminished mental capacity, elderly patients, animals, etc.

U.S. 11,544,441: Automated network-on-chip design

Various examples are provided related to automated chip design, such as a Pareto-optimization framework for automated network-on-chip design. In one example, a method for network-on-chip (NoC) design includes determining network performance for a defined NoC configuration comprising a plurality of n routers interconnected through a plurality of intermediate links; comparing the network performance of the defined NoC configuration to at least one performance objective; and determining, in response to the comparison, a revised NoC configuration based upon iterative optimization of the at least one performance objective through adjustment of link allocation between the plurality of n routers. In another example, a method comprises determining a revised NoC configuration based upon iterative optimization of at least one performance objective through adjustment of a first number of routers to obtain a second number of routers and through adjustment of link allocation between the second number of routers.

U.S. 11,353,326: Traverse and trajectory optimization and multi-purpose tracking

Various examples are provided for object identification and tracking, traverse-optimization and/or trajectory optimization. In one example, a method includes determining a terrain map including at least one associated terrain type; and determining a recommended traverse along the terrain map based upon at least one defined constraint associated with the at least one associated terrain type. In another example, a method includes determining a transformation operator corresponding to a reference frame based upon at least one fiducial marker in a captured image comprising a tracked object; converting the captured image to a standardized image based upon the transformation operator, the standardized image corresponding to the reference frame; and determining a current position of the tracked object from the standardized image.
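One way to picture the terrain-constrained traverse is a least-cost path search over a gridded terrain map, where each terrain type has a traversal cost and a constraint can forbid a type outright. The sketch below uses Dijkstra's algorithm with a hypothetical cost table (`TERRAIN_COST`); the abstract does not say which search method, cost model or map representation the patent uses.

```python
# Sketch only: terrain types, costs and the grid representation are assumed.
import heapq
from typing import Optional

Cell = tuple[int, int]

# Hypothetical constraint table: cost to enter a cell of each terrain type;
# None marks terrain the vehicle must not enter.
TERRAIN_COST: dict[str, Optional[float]] = {"flat": 1.0, "sand": 3.0, "rock": 5.0, "cliff": None}


def recommend_traverse(grid: list[list[str]], start: Cell, goal: Cell,
                       cost: dict[str, Optional[float]] = TERRAIN_COST
                       ) -> tuple[Optional[list[Cell]], float]:
    """Dijkstra search over 4-connected cells; returns (path, total cost)."""
    rows, cols = len(grid), len(grid[0])
    best = {start: 0.0}
    came_from: dict[Cell, Cell] = {}
    frontier = [(0.0, start)]
    while frontier:
        d, cell = heapq.heappop(frontier)
        if cell == goal:  # reconstruct the route back to start
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1], d
        if d > best[cell]:
            continue  # stale queue entry
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            step = cost[grid[nr][nc]]
            if step is None:
                continue  # constraint: forbidden terrain type
            nd = d + step
            if nd < best.get(nxt, float("inf")):
                best[nxt] = nd
                came_from[nxt] = cell
                heapq.heappush(frontier, (nd, nxt))
    return None, float("inf")


if __name__ == "__main__":
    terrain = [
        ["flat", "cliff", "flat"],
        ["flat", "rock", "flat"],
        ["flat", "flat", "flat"],
    ]
    print(recommend_traverse(terrain, (0, 0), (0, 2)))
```

In this example the cliff cell is excluded by the constraint and the rock cell is merely expensive, so the recommended traverse detours along the bottom row at a total cost of 6.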

U.S. 11,110,878: Vehicular tip or rollover protection mechanisms

Various examples are provided related to tip or rollover protection mechanisms for ground vehicles. In one example, a vehicle includes a vehicle frame and one or more protection mechanism(s) secured to the vehicle frame. The protection mechanism can allow the vehicle to “land” right-side up after tipping or rolling over for continuing operation. This can be beneficial for, but not limited to, autonomous or remotely controlled vehicles. The protection mechanism can include protection mechanisms secured to opposite sides of the vehicle frame. The protection mechanism can be passive, active, actuated or a combination thereof.

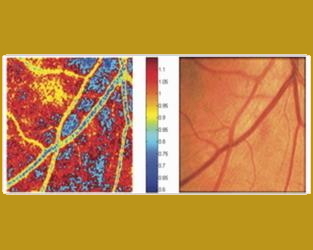

U.S. 10,842,373: Smartphone-based handheld ophthalmic examination devices

Various examples of methods, systems and devices are provided for ophthalmic examination. In one example, a handheld system includes an optical imaging assembly coupled to a user device that includes a camera aligned with optics of the optical imaging assembly. The user device can obtain ocular imaging data of at least a portion of an eye via the optics of the optical imaging assembly and provide ophthalmic evaluation results based at least in part upon the ocular imaging data. In another example, a method includes receiving ocular imaging data of at least a portion of an eye; analyzing the ocular imaging data to determine at least one ophthalmic characteristic of the eye; and determining a condition based at least in part upon the at least one ophthalmic characteristic.

C.N. 107169526B: Method for automatic feature analysis, comparison and anomaly detection

The application relates to a method of automatic feature analysis, comparison and anomaly detection. Novel methods and systems for automated data analysis are disclosed. The data may be automatically analyzed to determine features in different applications such as visual field analysis and comparison. Anomalies between groups of objects can be detected by clustering of objects.
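The abstracts in this family state only that anomalies between groups of objects can be detected by clustering. As one hedged illustration, the sketch below clusters feature vectors with a distance-threshold (single-linkage) rule and flags objects that land in clusters smaller than a chosen minimum size. The threshold, the minimum size, the feature representation and the names `cluster` and `find_anomalies` are assumptions; the patented method may cluster quite differently.

```python
# Sketch only: threshold clustering and the min-cluster-size rule are assumptions.
from math import dist


def cluster(points: list[tuple[float, ...]], threshold: float) -> list[list[int]]:
    """Single-linkage clustering: objects within `threshold` share a cluster."""
    parent = list(range(len(points)))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            if dist(points[i], points[j]) <= threshold:
                parent[find(i)] = find(j)  # merge the two clusters
    groups: dict[int, list[int]] = {}
    for i in range(len(points)):
        groups.setdefault(find(i), []).append(i)
    return list(groups.values())


def find_anomalies(points: list[tuple[float, ...]], threshold: float,
                   min_cluster_size: int = 2) -> list[int]:
    """Indices of objects that land in clusters smaller than min_cluster_size."""
    return sorted(i for members in cluster(points, threshold)
                  if len(members) < min_cluster_size for i in members)


if __name__ == "__main__":
    features = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (5.0, 5.0), (5.1, 5.0), (20.0, 20.0)]
    print(find_anomalies(features, threshold=0.5))  # [5]
```

Raising `min_cluster_size` to 3 would also flag the pair at (5, 5), which shows how strongly the result depends on the assumed thresholds.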

J.P. 6,577,607: Automatic Feature Analysis, Comparison, and Anomaly Detection

Novel methods and systems for automated data analysis are disclosed. Data can be automatically analyzed to determine features in different applications, such as visual field analysis and comparisons. Anomalies between groups of objects may be detected through clustering of objects.

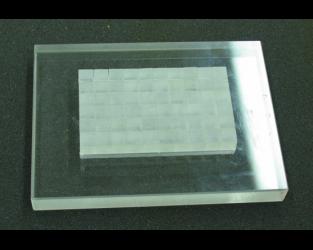

U.S. 10,265,529: Apparatus for electrical stimulation of cell and method of use

This invention provides an apparatus for electrically stimulating a cell and a method for using the same. In particular, the apparatus of the invention comprises an array of electrodes and a controller for actuating individual electrodes.

J.P. 6,272,892: Automatic Feature Analysis, Comparison, and Anomaly Detection

Novel methods and systems for automated data analysis are disclosed. Data can be automatically analyzed to determine features in different applications, such as visual field analysis and comparisons. Anomalies between groups of objects may be detected through clustering of objects.

U.S. 9,867,988: Apparatus for electrical stimulation of cell and method of use

This invention provides an apparatus for electrically stimulating a cell and a method for using the same. In particular, the apparatus of the invention comprises an array of electrodes and a controller for actuating individual electrodes.

C.N. 104769578B: Method of automatic feature analysis, comparison and anomaly detection

A new method and system for automated data analysis are disclosed. The data may be automatically analyzed to determine features in different applications, such as visual field analysis and comparison. Anomalies between groups of objects can be detected by clustering of objects.

U.S. 9,424,489: Automated feature analysis, comparison, and anomaly detection

Novel methods and systems for automated data analysis are disclosed. Data can be automatically analyzed to determine features in different applications, such as visual field analysis and comparisons. Anomalies between groups of objects may be detected through clustering of objects.

M.X. 339790: Automated feature analysis, comparison, and anomaly detection

Novel methods and systems for automated data analysis are disclosed. Data can be automatically analyzed to determine features in different applications, such as visual field analysis and comparisons. Anomalies between groups of objects may be detected through clustering of objects.

U.S. 9,122,956: Automated feature analysis, comparison, and anomaly detection

Novel methods and systems for automated data analysis are disclosed. Data can be automatically analyzed to determine features in different applications, such as visual field analysis and comparisons. Anomalies between groups of objects may be detected through clustering of objects.

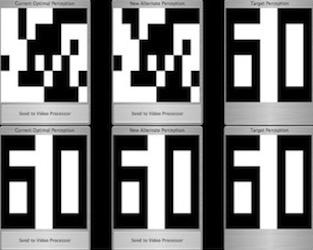

U.S. 8,260,428: Method and system for training a visual prosthesis

A method for training a visual prosthesis includes presenting a non-visual reference stimulus corresponding to a reference image to a visual prosthesis patient. Training data sets are generated by presenting a series of stimulation patterns to the patient through the visual prosthesis. Each stimulation pattern in the series is determined at least in part on a received user perception input and a fitness function optimization algorithm. The presented stimulation patterns and the user perception inputs are stored and presented to a neural network off-line to determine a vision solution.

U.S. 8,078,309: Method to create arbitrary sidewall geometries in 3-dimensions using LIGA with a Stochastic Optimization Framework

Disclosed herein is a method of making a three-dimensional mold comprising the steps of providing a mold substrate; exposing the substrate with an electromagnetic radiation source for a period of time sufficient to render the portion of the mold substrate susceptible to a developer to produce a modified mold substrate; and developing the modified mold with one or more developing reagents to remove the portion of the mold substrate rendered susceptible to the developer from the mold substrate, to produce the mold having a desired mold shape, wherein the electromagnetic radiation source has a fixed position, and wherein during the exposing step, the mold substrate is manipulated according to a manipulation algorithm in one or more dimensions relative to the electromagnetic radiation source; and wherein the manipulation algorithm is determined using stochastic optimization computations.

U.S. 7,762,664: Optomechanical and digital ocular sensor reader systems

Systems, methods, and devices are described for eye self-exam. In particular, optomechanical and digital ocular sensor reader systems are provided. The optomechanical system provides a device for viewing an ocular sensor implanted in one eye with the other eye. The digital ocular sensor system is a digital camera system for capturing an image of an eye, including an image of a sensor implanted in the eye.

U.S. 7,742,845: Multi-agent autonomous system and method

A method of controlling a plurality of crafts in an operational area includes providing a command system, a first craft in the operational area coupled to the command system, and a second craft in the operational area coupled to the command system. The method further includes determining a first desired destination and a first trajectory to the first desired destination, sending a first command from the command system to the first craft to move a first distance along the first trajectory, and moving the first craft according to the first command. A second desired destination and a second trajectory to the second desired destination are determined and a second command is sent from the command system to the second craft to move a second distance along the second trajectory.
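The command loop in this abstract (determine a destination and trajectory, then command each craft to move a set distance along it) can be sketched as below. Straight-line trajectories, a fixed maximum distance per command, and the names `Craft`, `MoveCommand` and `run` are simplifying assumptions; the abstract does not specify how trajectories are planned.

```python
# Sketch only: straight-line trajectories, max_step and all names are assumptions.
from dataclasses import dataclass
from math import hypot
from typing import Optional

ARRIVAL_TOLERANCE = 1e-9


@dataclass
class Craft:
    name: str
    x: float
    y: float


@dataclass(frozen=True)
class MoveCommand:
    craft: str
    dx: float
    dy: float


def next_command(craft: Craft, destination: tuple[float, float],
                 max_step: float) -> Optional[MoveCommand]:
    """Command a move of at most max_step along the straight line to the destination."""
    dx, dy = destination[0] - craft.x, destination[1] - craft.y
    remaining = hypot(dx, dy)
    if remaining <= ARRIVAL_TOLERANCE:
        return None  # already at the destination
    scale = min(1.0, max_step / remaining)
    return MoveCommand(craft.name, dx * scale, dy * scale)


def execute(craft: Craft, command: MoveCommand) -> None:
    """The craft carries out the received movement command."""
    craft.x += command.dx
    craft.y += command.dy


def run(assignments: list[tuple[Craft, tuple[float, float]]],
        max_step: float, max_rounds: int = 100) -> bool:
    """Command-system loop: one bounded move per craft per round until all arrive."""
    for _ in range(max_rounds):
        issued = [(craft, next_command(craft, dest, max_step)) for craft, dest in assignments]
        pending = [(craft, cmd) for craft, cmd in issued if cmd is not None]
        if not pending:
            return True
        for craft, cmd in pending:
            execute(craft, cmd)
    return False


if __name__ == "__main__":
    first, second = Craft("first", 0.0, 0.0), Craft("second", 10.0, 0.0)
    arrived = run([(first, (3.0, 4.0)), (second, (10.0, 2.0))], max_step=1.0)
    print(arrived, first, second)
```

Issuing short, bounded moves rather than a single long one gives the command system a chance to re-plan between rounds, which is where obstacle information from the tracking system could be fed back in.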

U.S. 7,734,063: Multi-agent autonomous system

A multi-agent autonomous system for exploration of hazardous or inaccessible locations. The multi-agent autonomous system includes simple surface-based agents or craft controlled by an airborne tracking and command system. The airborne tracking and command system includes an instrument suite used to image an operational area and any craft deployed within the operational area. The image data is used to identify the craft, targets for exploration, and obstacles in the operational area. The tracking and command system determines paths for the surface-based craft using the identified targets and obstacles and commands the craft using simple movement commands to move through the operational area to the targets while avoiding the obstacles. Each craft includes its own instrument suite to collect information about the operational area that is transmitted back to the tracking and command system. The tracking and command system may be further coupled to a satellite system to provide additional image information about the operational area and provide operational and location commands to the tracking and command system.

U.S. 7,481,534: Optomechanical and digital ocular sensor reader systems

Systems, methods, and devices are described for eye self-exam. In particular, optomechanical and digital ocular sensor reader systems are provided. The optomechanical system provides a device for viewing an ocular sensor implanted in one eye with the other eye. The digital ocular sensor system is a digital camera system for capturing an image of an eye, including an image of a sensor implanted in the eye.

U.S. 7,321,796: Method and system for training a visual prosthesis

A method for training a visual prosthesis includes presenting a non-visual reference stimulus corresponding to a reference image to a visual prosthesis patient. The visual prosthesis includes a plurality of electrodes. Training data sets are generated by presenting a series of stimulation patterns to the patient through the visual prosthesis. Each stimulation pattern in the series, after the first, is determined at least in part on a previous subjective patient selection of a preferred stimulation pattern among stimulation patterns previously presented in the series and a fitness function optimization algorithm. The presented stimulation patterns and the selections of the patient are stored and presented to a neural network off-line to determine a vision solution.
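The training loop described here, where each new stimulation pattern depends on the patient's previous preferred pattern plus a fitness-function optimization step, resembles an interactive evolutionary search. The sketch below is a minimal elitist version under stated assumptions: a pattern is a list of per-electrode amplitudes in [0, 1], new candidates are Gaussian mutations of the last preferred pattern, and the patient's subjective choice is simulated by a hypothetical function that prefers patterns closer to a hidden target. The recorded history corresponds to the data the abstract says is later presented to a neural network off-line; that off-line step is not shown.

```python
# Sketch only: pattern encoding, mutation scheme and the simulated patient are assumptions.
import random
from typing import Callable

Pattern = list[float]  # one amplitude in [0, 1] per electrode


def mutate(pattern: Pattern, rng: random.Random, sigma: float = 0.1) -> Pattern:
    """Perturb each electrode amplitude with Gaussian noise, clipped to [0, 1]."""
    return [min(1.0, max(0.0, a + rng.gauss(0.0, sigma))) for a in pattern]


def simulated_patient_choice(candidates: list[Pattern], target: Pattern) -> int:
    """Hypothetical stand-in for the patient's subjective pick: closest to a hidden target."""
    def mismatch(p: Pattern) -> float:
        return sum((a - t) ** 2 for a, t in zip(p, target))
    return min(range(len(candidates)), key=lambda i: mismatch(candidates[i]))


def train(n_electrodes: int, choose: Callable[[list[Pattern]], int],
          rounds: int = 50, per_round: int = 4, seed: int = 0):
    """Each round offers the last preferred pattern plus mutations of it."""
    rng = random.Random(seed)
    preferred = [rng.random() for _ in range(n_electrodes)]
    history = []  # (presented patterns, index chosen) pairs kept for off-line use
    for _ in range(rounds):
        candidates = [preferred] + [mutate(preferred, rng) for _ in range(per_round - 1)]
        chosen = choose(candidates)
        history.append((candidates, chosen))
        preferred = candidates[chosen]
    return preferred, history


if __name__ == "__main__":
    target = [0.2, 0.8, 0.5, 0.1]
    best, history = train(4, lambda c: simulated_patient_choice(c, target))
    print([round(a, 2) for a in best], len(history))
```

Keeping the previous favorite among the candidates means the patient can always decline every new variant, so the preferred pattern never gets worse under the simulated chooser.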

U.S. 7,131,945: Optically powered and optically data-transmitting wireless intraocular pressure sensor device

An implantable intraocular pressure sensor device for detecting excessive intraocular pressure above a predetermined threshold pressure is disclosed. The device includes a pressure switch that is sized and configured to be placed in an eye, wherein said pressure switch is activated when the intraocular pressure is higher than the predetermined threshold pressure. The device is optically powered and transmits data wirelessly using optical energy. In one embodiment, the pressure sensor device is a micro electromechanical system.

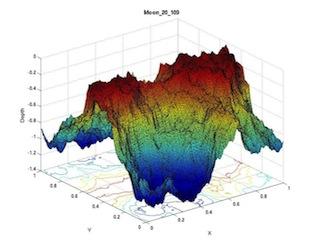

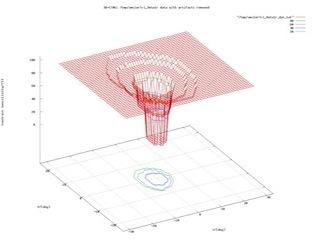

U.S. 7,101,044: Automated objective characterization of visual field defects in 3D

A method and apparatus for electronically performing a visual field test for a patient. A visual field test pattern is displayed to the patient on an electronic display device and the patient's responses to the visual field test pattern are recorded. A visual field representation is generated from the patient's responses. The visual field representation is then used as an input into a variety of automated diagnostic processes. In one process, the visual field representation is used to generate a statistical description of the rapidity of change of a patient's visual field at the boundary of a visual field defect. In another process, the area of a visual field defect is calculated using the visual field representation. In another process, the visual field representation is used to generate a statistical description of the volume of a patient's visual field defect.
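To make the area and volume calculations concrete, the sketch below assumes the visual field representation is a regular grid of measured contrast sensitivities with a known area per grid cell. A location counts as defective when it falls more than a tolerance below an assumed normal value; the area is the number of defective cells times the cell area, and the volume sums the sensitivity loss over those cells. The grid layout, threshold rule and function names are assumptions rather than the patent's stated method.

```python
# Sketch only: grid layout, normal value and threshold rule are assumptions.
Field = list[list[float]]  # measured contrast sensitivity per test location


def defect_cells(field: Field, normal: float, tolerance: float) -> list[tuple[int, int]]:
    """Locations whose sensitivity falls more than `tolerance` below normal."""
    return [(r, c) for r, row in enumerate(field)
            for c, value in enumerate(row) if normal - value > tolerance]


def defect_area(field: Field, normal: float, tolerance: float, cell_area: float) -> float:
    """Number of defective locations times the area each grid cell represents."""
    return len(defect_cells(field, normal, tolerance)) * cell_area


def defect_volume(field: Field, normal: float, tolerance: float, cell_area: float) -> float:
    """Sum of sensitivity loss times cell area over the defective locations."""
    return sum((normal - field[r][c]) * cell_area
               for r, c in defect_cells(field, normal, tolerance))


if __name__ == "__main__":
    field = [
        [1.0, 1.0, 1.0],
        [1.0, 0.2, 0.4],
        [1.0, 1.0, 0.9],
    ]
    # Assumed 2 x 2 degree grid spacing, so each cell covers 4 square degrees.
    print(defect_area(field, 1.0, 0.15, 4.0), defect_volume(field, 1.0, 0.15, 4.0))
```

Area alone treats a shallow and a deep defect of the same extent identically; the volume term distinguishes them by weighting each location by how much sensitivity was lost.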

U.S. 6,990,406: Multi-agent autonomous system

A multi-agent autonomous system for exploration of hazardous or inaccessible locations. The multi-agent autonomous system includes simple surface-based agents or craft controlled by an airborne tracking and command system. The airborne tracking and command system includes an instrument suite used to image an operational area and any craft deployed within the operational area. The image data is used to identify the craft, targets for exploration, and obstacles in the operational area. The tracking and command system determines paths for the surface-based craft using the identified targets and obstacles and commands the craft using simple movement commands to move through the operational area to the targets while avoiding the obstacles. Each craft includes its own instrument suite to collect information about the operational area that is transmitted back to the tracking and command system. The tracking and command system may be further coupled to a satellite system to provide additional image information about the operational area and provide operational and location commands to the tracking and command system.

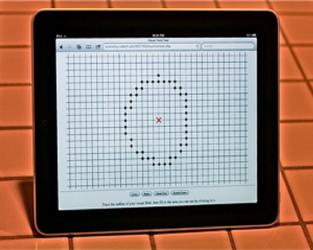

U.S. 6,769,770: Computer-based 3D visual field testing with peripheral fixation points

A method and apparatus for electronically performing a visual field test for a patient. A visual field test pattern including a peripheral fixation point is displayed to the patient on an electronic display device and the patient's responses to the visual field test pattern are recorded. A visual field representation is generated from the patient's responses. The visual field representation is then used as an input into a variety of diagnostic processes. In one embodiment of the invention, a series of visual test patterns of varying contrast levels or colors are presented to a patient in order to construct a three-dimensional visual field representation wherein contrast sensitivity is plotted as the Z-axis.

E.P. 1276411: Computer-based 3D visual field test method

A method and apparatus for electronically performing a visual field test for a patient. A visual field test pattern is displayed to the patient on an electronic display device and the patient's responses to the visual field test pattern are recorded. A visual field representation is generated from the patient's responses. The visual field representation is then used as an input into a variety of diagnostic processes. In one embodiment of the invention, a series of visual test patterns of varying contrast are presented to a patient in order to construct a three-dimensional visual field representation wherein contrast sensitivity is plotted against a Z-axis.

U.S. 6,578,966: Computer-based 3D visual field test system and analysis

A method and apparatus for electronically performing a visual field test for a patient. A visual field test pattern is displayed to the patient on an electronic display device and the patient's responses to the visual field test pattern are recorded. A visual field representation is generated from the patient's responses. The visual field representation is then used as an input into a variety of diagnostic processes. In one embodiment of the invention, a series of visual test patterns of varying contrast are presented to a patient in order to construct a three-dimensional visual field representation wherein contrast sensitivity is plotted against a Z-axis.
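The three-dimensional representation in these patents plots contrast sensitivity on the Z-axis over the test locations. A minimal sketch of how such a surface could be assembled from test patterns presented at several contrast levels: for each location, take the lowest contrast the patient reported seeing and use its reciprocal (the usual definition of contrast sensitivity) as the Z value, with 0 for locations never seen. The response format and the name `sensitivity_surface` are assumptions.

```python
# Sketch only: the response format and the 0-for-unseen convention are assumptions.
Location = tuple[int, int]  # (x, y) grid position of a test point


def sensitivity_surface(responses: dict[float, set[Location]],
                        locations: list[Location]) -> dict[Location, float]:
    """Map each location to 1 / (lowest contrast seen), or 0.0 if never seen.

    `responses` maps each presented contrast level (0 < contrast <= 1) to the
    set of locations the patient reported seeing at that level.
    """
    if any(contrast <= 0 for contrast in responses):
        raise ValueError("contrast levels must be positive")
    surface: dict[Location, float] = {}
    for loc in locations:
        seen = [contrast for contrast, hits in responses.items() if loc in hits]
        surface[loc] = 1.0 / min(seen) if seen else 0.0
    return surface


if __name__ == "__main__":
    responses = {
        1.0: {(0, 0), (0, 1)},
        0.5: {(0, 0), (0, 1)},
        0.1: {(0, 0)},
    }
    surface = sensitivity_surface(responses, [(0, 0), (0, 1), (1, 0)])
    for (x, y), z in sorted(surface.items()):
        print(x, y, z)  # the (x, y, z) points of the 3D representation
```

Plotted together, these (x, y, z) points form the hill-shaped sensitivity surface, and a scotoma shows up as a pit in it.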
